

Fast Endpoints is a technology for developing APIs that has become very popular recently. It is promoted as being significantly faster than Controller APIs and around the same speed as Minimal APIs. We set about finding out whether this is correct and, given something approaching realistic conditions, what sort of speeds can be expected.

APIs have become ubiquitous in modern systems. They are the default way to provide services to customers, either by themselves or underneath a customer-facing application. In addition, they are often used as components in disconnected or micro-services systems.

When servers, whether on-premises or in the cloud, approach 100% CPU and/or 100% memory usage for a sustained period, errors occur. That's one of the reasons why speed is important in APIs. The faster the API, the quicker each request is processed and the less pressure there is on the underlying hardware. Faster APIs therefore mean fewer errors. The fewer errors your systems experience, the happier your customers will be and the lower your support costs will be.

Conversely, the slower the API, the more pressure your hardware will be under, and the more likely it is that errors will occur, particularly during busy times. As a result you will be spending more on customer support. Sometimes an awful lot more.

.Net is one of the most popular platforms for developing APIs. In the .Net world, Controller-based APIs, being the original style of API released by Microsoft, are still the most widely used. One criticism of these, however, is that they hook into a lot of unnecessary services at start-up and, as a consequence, performance is sub-optimal.

With the release of .Net 6, however, Microsoft introduced Minimal APIs. As the name suggests, Minimal APIs start up with minimum baggage and therefore run at maximum speed. The developer is then free to choose which services they hook into.

The problem with Minimal APIs, however, is that the developer then has to do a lot of thinking about which services are required and how to hook into them. This means more discussion, more Architect involvement and usually more risk compared to the tried and trusted Controller APIs. In Controller APIs, a lot of services are provided out of the box, and when problems arise there is a lot of material available on the web to help developers as they proceed through a project.
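To make the "choose your own services" point concrete, here is a minimal sketch of a Minimal API in a .Net 8 web project with implicit usings enabled. It is illustrative only and not the code from our test projects: `Product`, `IProductRepository`, `InMemoryProductRepository` and the `/products` route are hypothetical names.

```csharp
// Program.cs for a .Net 8 web project (Microsoft.NET.Sdk.Web, implicit usings enabled).
// Hypothetical names for illustration: Product, IProductRepository,
// InMemoryProductRepository and the /products route.
var builder = WebApplication.CreateBuilder(args);

// Only the services you explicitly register are added to the container.
builder.Services.AddSingleton<IProductRepository, InMemoryProductRepository>();

var app = builder.Build();

// The route and its handler are declared together; the repository is
// resolved from the service container for each request.
app.MapGet("/products/{id:int}", (int id, IProductRepository repo) =>
    repo.Find(id) is { } product ? Results.Ok(product) : Results.NotFound());

app.Run();

public record Product(int Id, string Name);

public interface IProductRepository
{
    Product? Find(int id);
}

public class InMemoryProductRepository : IProductRepository
{
    private readonly Dictionary<int, Product> _items = new()
    {
        [1] = new Product(1, "Sample")
    };

    // Returns null when the id is not present.
    public Product? Find(int id) => _items.GetValueOrDefault(id);
}
```

A Controller API would instead call `builder.Services.AddControllers()` and `app.MapControllers()`, which registers the wider set of MVC services up front.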

In response to the perceived shortcomings of Minimal APIs, Fast Endpoints was released. It provides a highly structured base that retains the performance benefits of Minimal APIs, and it is deliberately designed to be very testable. Also, the documentation available on the website makes it reasonably easy for developers to incorporate new functionality as they proceed through a project.
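The structured pattern looks roughly like the sketch below: one class per endpoint, with a request type and a response type. This assumes the FastEndpoints NuGet package is referenced; the request, response and endpoint names and the route are hypothetical, and details may vary between library versions.

```csharp
// Program.cs, assuming the FastEndpoints NuGet package is referenced.
// Hypothetical names for illustration: GetProductRequest, ProductResponse,
// GetProductEndpoint and the /products/{id} route.
using FastEndpoints;

var builder = WebApplication.CreateBuilder(args);
builder.Services.AddFastEndpoints();   // registers the endpoint classes found in the project

var app = builder.Build();
app.UseFastEndpoints();                // maps each endpoint to the route it configures
app.Run();

public class GetProductRequest
{
    public int Id { get; set; }        // bound from the {id} route segment
}

public class ProductResponse
{
    public int Id { get; set; }
    public string Name { get; set; } = "";
}

public class GetProductEndpoint : Endpoint<GetProductRequest, ProductResponse>
{
    // Route, verb and security settings live next to the handler.
    public override void Configure()
    {
        Get("/products/{id}");
        AllowAnonymous();
    }

    // Assigning the Response property is enough for the response to be sent.
    public override Task HandleAsync(GetProductRequest req, CancellationToken ct)
    {
        Response = new ProductResponse { Id = req.Id, Name = "Sample" };
        return Task.CompletedTask;
    }
}
```

Because each endpoint is a small class with typed input and output, it can be exercised in isolation, which is where much of the testability comes from.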

We wanted to know just how fast Fast Endpoints and Minimal APIs are compared with the old and allegedly sluggish Controller APIs, particularly given the release of .Net 8. So using Visual Studio and C# we investigated.

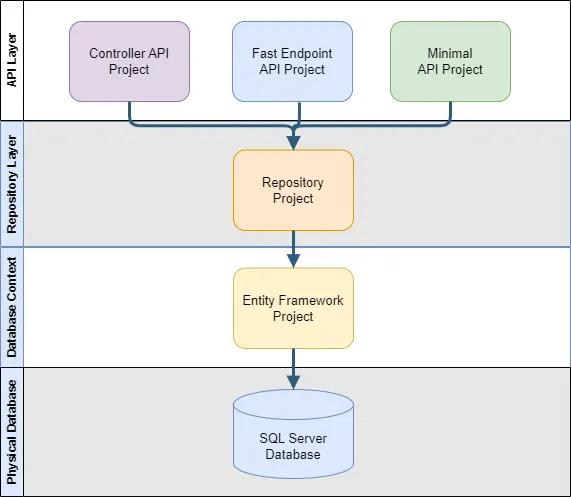

We created identical API projects for Controller APIs, Minimal APIs and Fast Endpoints. Each project had the same endpoints and called the same repository layer functions underneath for data entry and querying. The repository layers then called an Entity Framework data context project. We used an actual SQL Server database so that the benchmarks would have some real-world relevance. The SQL Server database had 4 related tables populated with reference data and around 10,000 observations.

We published each of the API projects to Azure App Service. The APIs shared the same App Service Plan to start with, and then had their own App Service plan for the final test. One complication with using Azure App Service is that with most plans, other users of Azure share the same Azure infrastructure, which could have minor impacts on testing results.

The next step was to create a JMeter script to call each of the three API projects (Controllers, Minimal, FastEndpoints).

Each JMeter script had a call to do the following on its respective API:

Finally, we created Azure Load Testing resources and ran the JMeter scripts for each of the APIs under four different scenarios.

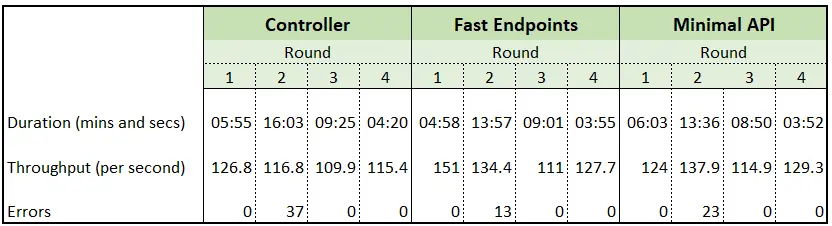

We used different configurations in order to ensure the results were accurate. Here is a summary of the results:

Azure Load Testing allows a maximum of 250 concurrent users per Load Testing instance, so we used 250 users in the initial round (Round 0). However, after only a few minutes of testing it was clear from the number of errors that 250 users was too many for this level of App Service Plan, and we stopped testing.

In Round 1, Fast Endpoints was the fastest. Surprisingly, Minimal APIs were the slowest, at around the same speed as Controller APIs. Fast Endpoints showed a 23% speed advantage over Minimal APIs and a 20% advantage over Controller APIs.

In this round we tested each API consecutively and the testing cycle was fairly short. There may have been extraneous factors affecting the performance of Minimal APIs.

In Round 2 the order was what you might expect. The fastest was Minimal APIs, which was 7% faster than Fast Endpoints and 21% faster than Controller APIs. Interestingly, at the end of this round, each of the APIs produced some errors as the testing wound down.

In this round we tested each API consecutively and the testing cycle was around 15 minutes for each API. However, Minimal APIs were tested a few hours after the other two and so may have benefited from less activity on the associated Azure infrastructure.

In Round 3 we wanted to test each API at exactly the same time in order to rule out extraneous factors. To run all the tests at the same time, we increased the scale of the App Service Plan to Premium V3.

Whilst running all the tests simultaneously, however, we started seeing SQL exceptions from Entity Framework. To fix this we had to make a code change to enable retries from Entity Framework to SQL Server.
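A typical way to enable this kind of retry in Entity Framework Core is the SQL Server provider's `EnableRetryOnFailure` option. The sketch below is illustrative only: the `AppDbContext` name, the `Default` connection string name and the retry values are assumptions, not the settings from our test projects.

```csharp
// Program.cs, assuming the Microsoft.EntityFrameworkCore.SqlServer package is referenced.
// Hypothetical names and values: AppDbContext, the "Default" connection string,
// and the retry count and delay.
using Microsoft.EntityFrameworkCore;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddDbContext<AppDbContext>(options =>
    options.UseSqlServer(
        builder.Configuration.GetConnectionString("Default"),
        // Retry transient SQL Server failures instead of surfacing them immediately.
        sql => sql.EnableRetryOnFailure(
            maxRetryCount: 5,
            maxRetryDelay: TimeSpan.FromSeconds(10),
            errorNumbersToAdd: null)));

var app = builder.Build();
app.Run();

public class AppDbContext : DbContext
{
    public AppDbContext(DbContextOptions<AppDbContext> options) : base(options) { }
}
```

With retries enabled, a transient failure under heavy concurrent load is attempted again after a delay rather than immediately failing the request.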

In this round, Minimal APIs were fastest, but only 3.5% faster than Fast Endpoints and only 4.5% faster than Controller APIs. It seems that running all requests simultaneously on the higher specification App Service Plan, and perhaps the code change, had evened out the differences between the API technologies.

Interestingly, under the new higher scale App Service Plan each API was delivering over 100 transactions per second, which meant the entire plan was delivering well over 300 transactions per second.

One last round (Round 4). In this round we decided to go back to testing consecutively. In order to rule out extraneous factors we opted to test over a smaller number of repetitions so that the testing took place at almost the same time. Also, we decided to test with only 50 users so that the App Service Plan would not be overburdened.

The result for this round was probably the best estimate of the speed difference between the technologies, along with Round 2. Minimal APIs were once again fastest, followed by Fast Endpoints (1.3% behind) and then Controller APIs (12% behind Minimal APIs).

As expected, Minimal APIs are the fastest, followed by Fast Endpoints and then Controller APIs. However, in the context of an n-tier application, Minimal APIs are only 12% to 20% faster than Controller APIs, depending on the circumstances.
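When reading percentages like these, it helps to remember that "faster than" and "behind" use different baselines. The console sketch below shows the two calculations using made-up throughput figures; the numbers are hypothetical and are not results from our tests.

```csharp
// Console sketch with hypothetical throughput figures (requests per second).
// These are NOT results from the load tests described in this article.
double minimalApi = 112.0;
double controllers = 100.0;

// "Faster than": the gap measured against the slower technology.
double percentFaster = (minimalApi - controllers) / controllers * 100.0;

// "Behind": the same gap measured against the faster technology.
double percentBehind = (minimalApi - controllers) / minimalApi * 100.0;

Console.WriteLine($"Minimal APIs are {percentFaster:F1}% faster than Controller APIs");
Console.WriteLine($"Controller APIs are {percentBehind:F1}% behind Minimal APIs");
```

With these made-up figures the same gap reads as roughly 12% when measured one way and roughly 10.7% when measured the other, so it is worth checking which baseline a benchmark uses before comparing numbers.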

Overall the performance of all flavours of .Net 8 APIs was very impressive. For around £70 per month, an S1 plan can comfortably support 50 simultaneous users. For around £300 a Premium v2 plan can support well over 300 simultaneous users.

Minimal APIs were the fastest technology, and so if speed is everything then they are the recommended option.

Fast Endpoints offers the most testable technology and has a neat, structured pattern to development. For most new projects Fast Endpoints is our recommended option.

There are a lot of variables to consider when upgrading an application, and the fastest endpoint technology is not always the most important factor. The improved speed of Fast Endpoints and Minimal APIs isn't, in itself, a compelling reason to switch away from Controller APIs.

In cases where an application has few bugs but is in need of an upgrade, our recommendation would be to stay with Controller APIs.

If, however, an existing Controller API project is poorly organised, has bugs and is in need of a more testable structure, then switching to Fast Endpoints is recommended.

Load testing shows up problems that simply don't show up in other forms of testing. Round 0 showed that applications won't just run slowly if the App Service Plan isn't sufficient; they will produce errors, and a lot of them.

The various rounds of our testing show the importance of matching your testing setup to your architecture. The bug that appeared in Round 3 did not appear in most rounds of testing. However, it would appear in a real-world scenario where different applications use the same database.

Finally, it is important to test under a realistic load scenario and not just with the most users possible. Load testing can get quite expensive, so start with a moderate load scenario for your applications.

If you have a project that you are looking to upgrade to .Net 8, migrate to Azure or would like to load test, we'd be happy to discuss it with you.
