using AI to build software and how to avoid the race to the average

Using AI to build software can result in a slippery slope towards developing average code.


One way of defining Cloud-Native applications is the following:

Cloud-Native applications can do data entry, and they can be n-tier, but when they need to communicate with other applications they should do so in a loosely coupled fashion, usually through publishing a message. When applications do not depend upon communication with external services with highly variable latencies, auto-scaling via Kubernetes or other methods becomes far more effective.

Migrating from a monolithic n-tier application to a set of micro-services can seem a daunting exercise, and many organisations become bogged down in endless analysis and disagreements over minor technical choices.

As a pragmatic choice, organisations often simply lift and shift their n-tier applications to the cloud, and as a consequence miss most of the benefits of being in the cloud in the first place.

There are often clear and obvious improvements that can be made to n-tier applications by moving peripheral functionality out of the application and into messaging-based components and micro-services.

Here is a classic example based on an actual scenario.

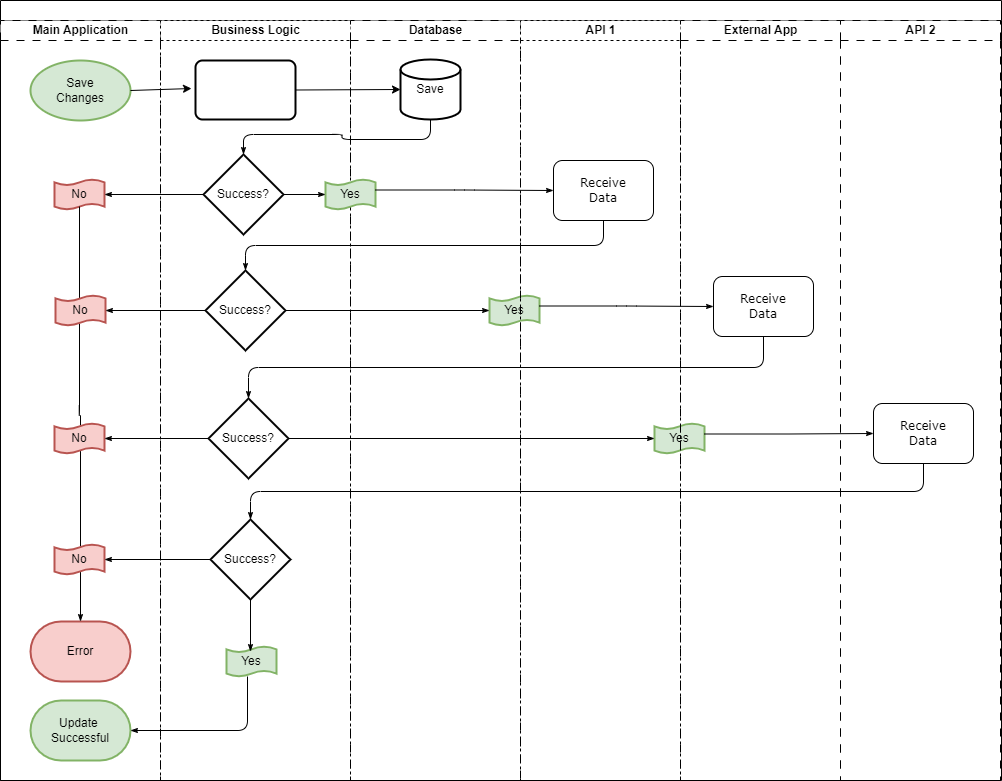

In the diagram below, an n-tier application provides content to be used in other websites and applications. After a record was saved, the application made three further calls, to the APIs and to another application, before the data entry screen was finally updated with a success message.

This architecture created a tight coupling between the main application and three others. If any of the three calls failed, an error would occur in the application before the main application's screen was updated, even though the database update was successful.

Whilst each of the three events after the database update should occur at some point, none of them was intrinsically related to the transaction itself, and their failure should not have prevented the main application from continuing. In addition, the success or failure of each of the three events should have been independent of the other two.
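The coupling problem described above can be sketched in a few lines. This is a minimal illustration only, not the code from the actual scenario: the dictionary standing in for the database, `DownstreamError`, `save_tightly_coupled` and the three `call_*` functions are all hypothetical names.

```python
class DownstreamError(Exception):
    """Raised when a (hypothetical) downstream call fails."""


def save_tightly_coupled(database, record_id, content, downstream_calls):
    """Save the record, then run every downstream call inline."""
    database[record_id] = content      # the database update succeeds here
    for call in downstream_calls:
        call(record_id)                # any exception escapes to the caller
    return "success"


calls_made = []


def call_one(record_id):
    calls_made.append("one")


def call_two(record_id):
    # Simulate one of the three downstream services being unavailable.
    raise DownstreamError("second service unavailable")


def call_three(record_id):
    calls_made.append("three")


database = {}
try:
    outcome = save_tightly_coupled(
        database, "rec-1", "hello", [call_one, call_two, call_three]
    )
except DownstreamError as exc:
    outcome = f"error shown to editor: {exc}"

print(outcome)     # the editor sees an error...
print(database)    # ...although the record was saved
print(calls_made)  # and the third call never ran
```

The record is in the database, yet the editor is shown an error and the third call is skipped because the second one failed.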

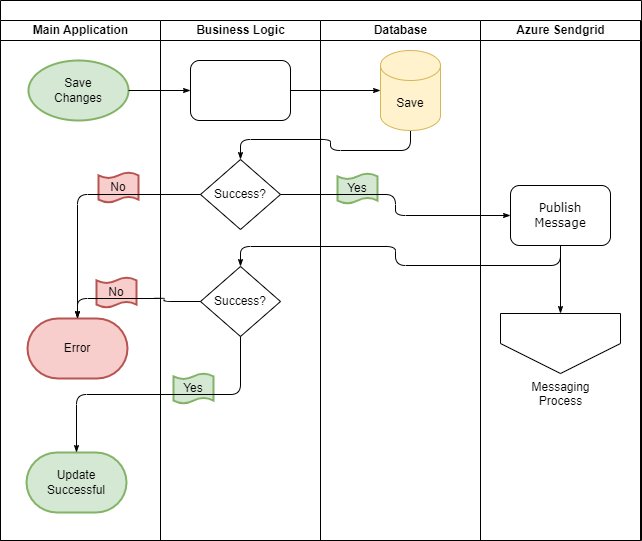

The Cloud-Native architecture is given below:

In the revised architecture, the application saves the record and then simply publishes a message to Azure Event Grid (we could have used Azure Service Bus). The result of the database save is then returned to the Content Editor in all cases.
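As a rough sketch of the save-then-publish step, assuming an in-memory stand-in for the message broker rather than a real Azure SDK client: `InMemoryTopic`, `save_and_publish` and the `record.saved` event type are hypothetical names chosen for illustration.

```python
import json
from collections import deque


class InMemoryTopic:
    """Hypothetical stand-in for a message broker topic."""

    def __init__(self):
        self.messages = deque()

    def publish(self, event_type, data):
        # Serialise the event so the publisher shares no objects with consumers.
        self.messages.append(json.dumps({"type": event_type, "data": data}))


def save_and_publish(database, topic, record_id, content):
    """Save the record, announce the change, and return the save result."""
    database[record_id] = content
    # The application only announces that the record changed. It does not
    # know, or wait for, whoever reacts to the message.
    topic.publish("record.saved", {"record_id": record_id})
    return "saved"


database = {}
topic = InMemoryTopic()
result = save_and_publish(database, topic, "rec-1", "hello")
published = [json.loads(message) for message in topic.messages]

print(result)
print(published)
```

The main application's only outbound dependency is now the topic, so its response time no longer depends on the latency of the downstream services.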

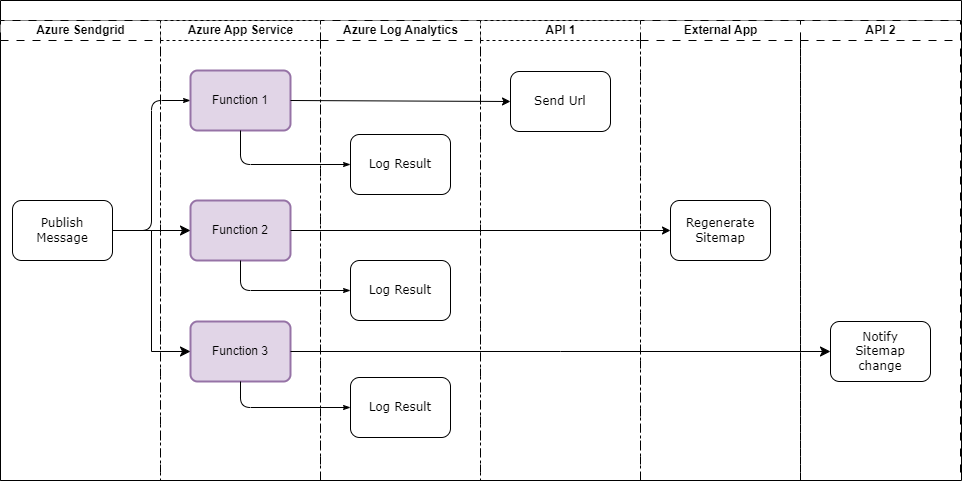

The calls to the APIs and the other application are then made by three micro-services, each of which receives notifications from Azure Event Grid. Azure Functions are a natural fit for these micro-services.
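The independence of the three subscribers can be illustrated as follows. This is a simplified sketch under stated assumptions: the `deliver` function imitates a broker handing the same event to each subscriber separately, and the three service functions are hypothetical placeholders. A real broker would typically also retry failed deliveries, which is not modelled here.

```python
def deliver(event, subscribers):
    """Hand the event to each subscriber separately, isolating failures."""
    outcomes = {}
    for name, handler in subscribers.items():
        try:
            handler(event)
            outcomes[name] = "ok"
        except Exception as exc:
            # One subscriber failing does not stop the others.
            outcomes[name] = f"failed: {exc}"
    return outcomes


received = []


def first_api_service(event):
    received.append(("first_api", event["data"]["record_id"]))


def second_api_service(event):
    # Simulate this service's downstream API being unavailable.
    raise ConnectionError("API unavailable")


def other_application_service(event):
    received.append(("other_application", event["data"]["record_id"]))


event = {"type": "record.saved", "data": {"record_id": "rec-1"}}
outcomes = deliver(
    event,
    {
        "first_api": first_api_service,
        "second_api": second_api_service,
        "other_application": other_application_service,
    },
)

print(outcomes)
print(received)
```

Here the second service fails, but the first and third still process the event, and none of this is visible to the Content Editor.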

The benefit of the Cloud-Native architecture is that the main application just fulfils its main purpose. It becomes far easier to Integration Test and Load Test, and without the latency vagaries of calls to external sources, the application is far easier to auto-scale.

In addition, the functionality of the calls to the APIs and the other application can all be tested in isolation as there is no longer any unnecessary coupling between these events.
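To show what testing in isolation might look like, here is a small sketch of one subscriber exercised with a fake HTTP client and no main application in sight. The handler factory `make_content_api_handler`, the `/content-updated` path and the event shape are assumptions made for the example.

```python
from unittest.mock import Mock


def make_content_api_handler(post):
    """Build a handler that forwards saved-record events via `post`."""

    def handle(event):
        record_id = event["data"]["record_id"]
        # `post` is injected, so a test can replace the real HTTP call.
        post("/content-updated", {"record_id": record_id})

    return handle


# The test supplies a fake `post` and a hand-built event; neither the
# database nor the main application is involved.
fake_post = Mock()
handler = make_content_api_handler(fake_post)
handler({"type": "record.saved", "data": {"record_id": "rec-1"}})

fake_post.assert_called_once_with("/content-updated", {"record_id": "rec-1"})
print("handler made exactly one call:", fake_post.call_count == 1)
```

Because the handler depends only on the event and an injected client, each of the three services can be tested like this without the other two.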

It sounds simple when looking at the diagrams above, but similar situations often lurk in codebases without anybody being aware. If these situations are not addressed, applications can be very difficult to Integration Test, End to End Test, Load Test or auto-scale in an efficient manner. We have experience of finding these situations, even in large codebases.

What do you think?

We'd love to hear your thoughts. Does this situation ring a bell?

If you are having trouble migrating to the cloud or feel your applications haven't benefited from being in the cloud, please contact us. It's amazing what a code review could turn up and we would be happy to discuss it, without obligation.
