using AI to build software and how to avoid the race to the average

Using AI to build software can result in a slippery slope towards developing average code.


Storing sensitive data such as usernames and passwords in a YAML file is, as we all know, a very bad security practice. Keeping them in Azure Key Vault and downloading them in a DevOps pipeline removes this problem and helps you build a secure DevOps pipeline in Azure.

When I went to implement this in my DevOps pipelines, however, I noticed that most articles on the subject do not cover the setup process from start to finish, and there are a few gotchas along the way. I therefore decided to write this article, which hopefully explains how to set it all up from start to finish.

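There are five steps in the process: create a Service Principal, create a Key Vault, add Access Policies for the Service Principal, set up a Service Connection in Azure DevOps, and read the Key Vault from your YAML file with the AzureKeyVault task.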

You need to create a Service Principal for this process, and the easiest way to do this is in the Bash Shell in the Azure Portal. If you have more than one subscription, however, you need to make sure the correct subscription is set. You can do that as follows:

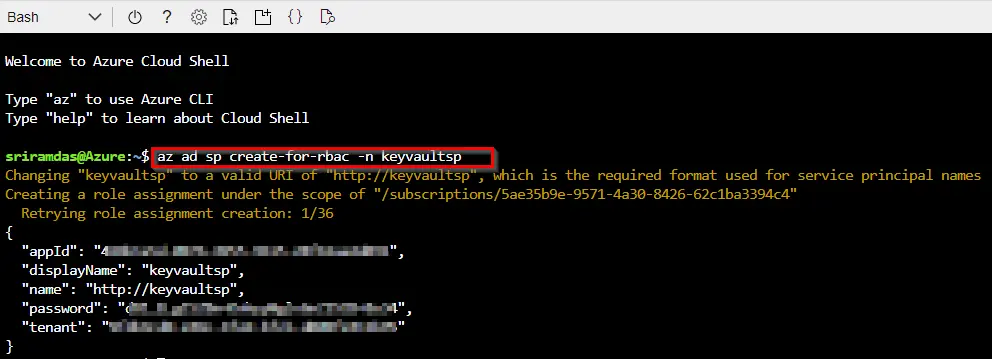
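As a rough sketch, setting the subscription from the Bash Shell could look like the following. The `az account` commands are standard Azure CLI. The function name `use_subscription` and the placeholder value are assumptions made for illustration.

```shell
# Hypothetical placeholder: replace with your own subscription name or ID.
SUBSCRIPTION="<subscription-name-or-id>"

# Switch the active subscription, then print its name to confirm the change.
use_subscription() {
  az account set --subscription "$1" &&
    az account show --query name --output tsv
}
```

Running `az account list --output table` first lists the subscriptions you can choose from. After that, call `use_subscription "$SUBSCRIPTION"`.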

Now to create a Service Principal. If you have been working in Azure for a while, you will already have lots of different Service Principals, but it's best to set up a new one for this purpose.

To create a Service Principal that is configured to work in pipelines, do the following:

```shell
az ad sp create-for-rbac -n keyvaultsp
```

If you don't have a Key Vault already, you need to create one. You can create it from the portal or from the command line. Follow these instructions if you want to create one from the portal.

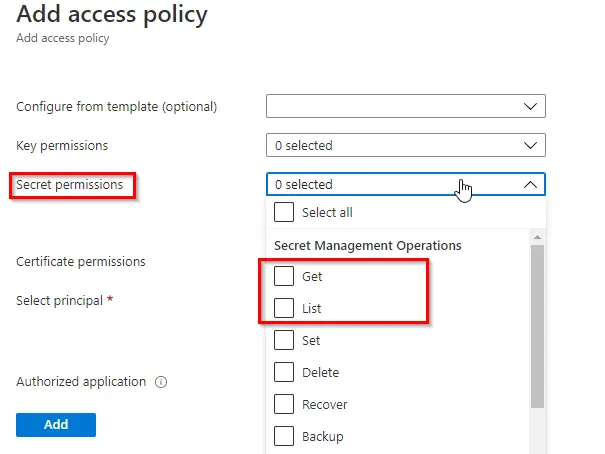
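If you prefer the command line, a minimal sketch might look like this. It assumes the Resource Group already exists and that the vault should use the access-policy permission model described in this article, which is why `--enable-rbac-authorization false` is passed explicitly. The function names and placeholder values are hypothetical.

```shell
# Hypothetical placeholders: replace with your own values.
VAULT_NAME="<key-vault-name>"        # Key Vault names must be globally unique
RESOURCE_GROUP="<resource-group>"    # assumed to exist already
LOCATION="<azure-region>"

# Create a vault that uses access policies rather than Azure RBAC.
create_key_vault() {
  az keyvault create \
    --name "$1" \
    --resource-group "$2" \
    --location "$3" \
    --enable-rbac-authorization false
}

# Store one secret in the vault. The value is passed on the command line,
# so it can end up in your shell history.
add_secret() {
  az keyvault secret set --vault-name "$1" --name "$2" --value "$3"
}
```

For example, `create_key_vault "$VAULT_NAME" "$RESOURCE_GROUP" "$LOCATION"` followed by `add_secret "$VAULT_NAME" SqlUsername "<value>"` would give the pipeline a secret to read later.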

To add Access Policies to the Key Vault so that the Service Principal created earlier has access to it, do the following:
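From the command line, the same kind of access can be granted with `az keyvault set-policy`. This is a sketch under a few assumptions: the vault uses access policies, the pipeline only needs to read secrets (get and list), and the Service Principal is identified by the `appId` value printed by `az ad sp create-for-rbac`. The function name and placeholders are hypothetical.

```shell
# Hypothetical placeholders: replace with your own values.
VAULT_NAME="<key-vault-name>"
SP_APP_ID="<appId-from-create-for-rbac-output>"

# Grant the Service Principal read-only access to secrets in the vault.
grant_secret_read_access() {
  az keyvault set-policy \
    --name "$1" \
    --spn "$2" \
    --secret-permissions get list
}
```

Call it as `grant_secret_read_access "$VAULT_NAME" "$SP_APP_ID"`. Keeping the permissions to get and list means the pipeline can read secrets but cannot change or delete them.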

You need to have a Service Connection in your project that has permissions to read from the Resource Group that your Key Vault is in. In the Azure Portal note the Subscription and the Resource Group your Key Vault is in.

In Azure DevOps, do the following:

You can go through the existing Service Connections to see whether one already targets the Subscription and Resource Group that your Key Vault is in, or you can create a new one by doing the following:

Access your Key Vault in your YAML file by using the AzureKeyVault task.

Now you will have the following in your YAML file:

```yaml
- task: AzureKeyVault@1
  inputs:
    azureSubscription: 'xxxxxxxxxxxxxxx'
    KeyVaultName: 'xxxxxxxxxxxxx'
    SecretsFilter: '*'
    RunAsPreJob: false
```

Now you can use the Key Vault values in the YAML file. The following is an example of a SqlAzureDacpacDeployment task that uses two Key Vault secrets, SqlUsername and SqlPassword:

```yaml
- task: SqlAzureDacpacDeployment@1
  inputs:
    azureSubscription: 'xxxxxxxxxxx (xxxxxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxx)'
    AuthenticationType: 'server'
    ServerName: 'xxxxxsqlserver.database.windows.net,1433'
    DatabaseName: 'WebContent'
    SqlUsername: $(SqlUsername)
    SqlPassword: $(SqlPassword)
    DacpacFile: '$(System.DefaultWorkingDirectory)/bin/Debug/WebContentDbDeploy.dacpac'
    AdditionalArguments: '/p:BlockOnPossibleDataLoss=false /p:AllowDropBlockingAssemblies=true'
    PublishProfile: '$(System.DefaultWorkingDirectory)/bin/Debug/WebContentDbDeploy.publish.xml'
```

